Check out “Assembly”, a piece by Kimchi and Chips that combines projection mapping with 5,500 suspended blocks. Each block acts like an individually addressable LED, except that the light comes from projectors rather than from the blocks themselves.

Technical Setup:

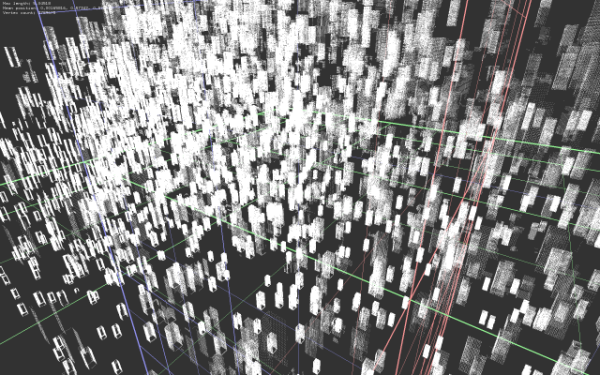

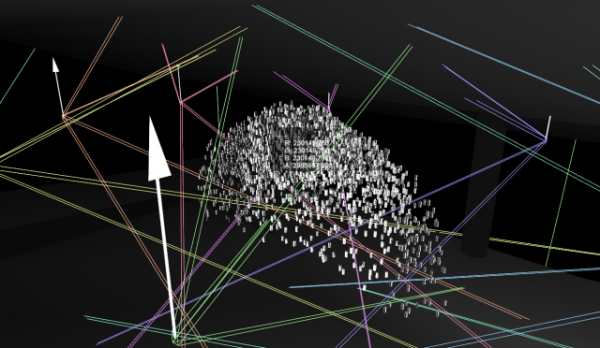

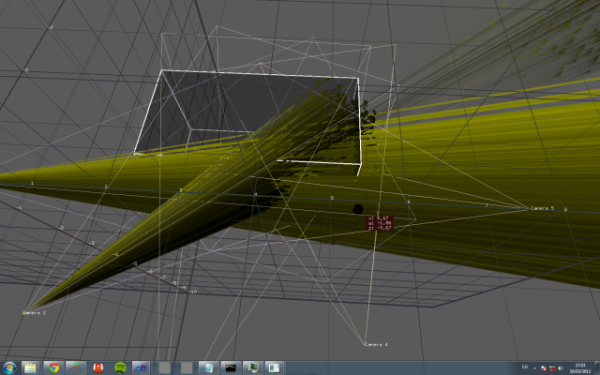

The designers use a structured light approach, pairing cameras with projectors, to create a 3D scan of the scene. Five projectors are connected to a single computer, all rendering the content simultaneously in real time. Using openFrameworks and five high-resolution cameras positioned alongside the projectors, the structured light scan lets the cameras “see” on behalf of the projectors: it finds correspondences between camera pixels and projector pixels, which are then used to triangulate the depth of the scene.
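Beyond naming K&C's ofxGraycode addon, the article does not spell out the pattern scheme, but Gray-code column coding is a common way to obtain camera-to-projector correspondences. The following is a minimal NumPy sketch under that assumption, not ofxGraycode's API: each projector column index is written as a binary-reflected Gray code, one bit plane per projected frame, and decoding the thresholded camera captures recovers which projector column each camera pixel sees. `gray_code_patterns` and `decode_gray` are hypothetical names.

```python
import numpy as np


def gray_code_patterns(width: int, height: int) -> np.ndarray:
    """Return one binary frame per bit, shape (n_bits, height, width).

    Frame k is white (1) wherever bit k of the Gray code of the projector
    column index is set; frame 0 carries the most significant bit.
    """
    n_bits = max(1, int(np.ceil(np.log2(width))))
    cols = np.arange(width)
    gray = cols ^ (cols >> 1)                       # binary-reflected Gray code
    shifts = np.arange(n_bits - 1, -1, -1)          # MSB first
    bits = (gray[None, :] >> shifts[:, None]) & 1   # (n_bits, width)
    return np.repeat(bits[:, None, :], height, axis=1).astype(np.uint8)


def decode_gray(bit_images: np.ndarray) -> np.ndarray:
    """Turn thresholded captures (n_bits, H, W) into projector column indices."""
    gray = np.zeros(bit_images.shape[1:], dtype=np.int64)
    for frame in bit_images:                         # reassemble the Gray code, MSB first
        gray = (gray << 1) | frame.astype(np.int64)
    # Gray -> binary: b = g ^ (g >> 1) ^ (g >> 2) ^ ...
    binary = gray.copy()
    shift = gray >> 1
    while np.any(shift):
        binary ^= shift
        shift >>= 1
    return binary
```

Gray codes are used because neighbouring columns differ in exactly one bit, so a pixel that straddles a stripe edge is off by at most one column. Real scans usually also project inverted patterns to threshold each bit robustly, and repeat the process for rows; both are omitted here.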

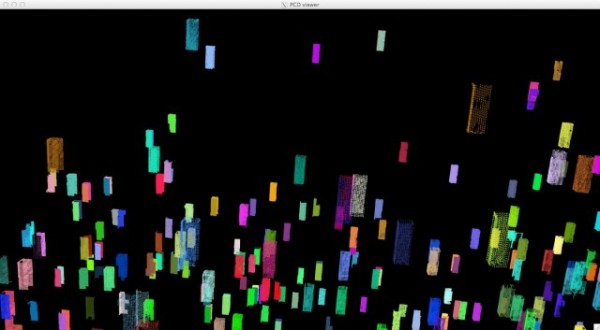
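Once a camera pixel and a projector pixel are known to correspond, and both devices are calibrated, each pixel defines a ray in space and the surface point lies where the two rays (nearly) meet. A minimal sketch, assuming a pinhole model with the convention x_cam = R·x_world + t and ignoring lens distortion; the midpoint method used here is one standard choice and not necessarily what K&C's ofxRay does. `pixel_ray` and `triangulate_midpoint` are hypothetical names.

```python
import numpy as np


def pixel_ray(K, R, t, u, v):
    """Back-project pixel (u, v) of a pinhole device into a world-space ray.

    Assumes x_cam = R @ x_world + t and no lens distortion.
    Returns (origin, direction); direction is not normalised.
    """
    K, R, t = (np.asarray(m, dtype=float) for m in (K, R, t))
    origin = -R.T @ t                                   # optical centre in world space
    direction = R.T @ np.linalg.solve(K, np.array([u, v, 1.0]))
    return origin, direction


def triangulate_midpoint(c1, d1, c2, d2):
    """Midpoint of the shortest segment joining rays c1 + s*d1 and c2 + t*d2.

    Returns (point, gap), where gap is the distance between the rays at
    their closest approach (a useful per-pixel quality measure).
    """
    c1, d1, c2, d2 = (np.asarray(v, dtype=float) for v in (c1, d1, c2, d2))
    w0 = c1 - c2
    a, b, c = d1 @ d1, d1 @ d2, d2 @ d2
    d, e = d1 @ w0, d2 @ w0
    denom = a * c - b * b
    if abs(denom) < 1e-12:
        raise ValueError("rays are (nearly) parallel")
    s = (b * e - c * d) / denom      # parameter along the camera ray
    t = (a * e - b * d) / denom      # parameter along the projector ray
    p1, p2 = c1 + s * d1, c2 + t * d2
    return (p1 + p2) / 2, float(np.linalg.norm(p1 - p2))
```

In a structured light setup the camera pixel is the one being observed and the projector pixel is the one decoded from the projected patterns; a large `gap` flags a bad correspondence.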

They also use the Point Cloud Library (PCL) to fit cuboids to the scanned locations of the physical blocks, modeling the position and orientation of each cube.
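The article does not say how the cuboids were fitted. As one plausible building block, and not K&C's actual pipeline, a cube face can be found in the scanned point cloud with RANSAC plane fitting (PCL provides RANSAC-based sample consensus segmentation for this kind of task); the normals of a cube's visible faces then give its orientation. A minimal NumPy sketch, assuming coordinates in metres; `ransac_plane` and its 2 mm default threshold are hypothetical.

```python
import numpy as np


def ransac_plane(points, threshold=0.002, iterations=500, seed=0):
    """Fit a plane n.x + d = 0 to an (N, 3) point array with RANSAC.

    Returns (normal, d, inlier_mask); normal has unit length.
    """
    pts = np.asarray(points, dtype=float)
    rng = np.random.default_rng(seed)
    best_mask, best_count = None, -1
    for _ in range(iterations):
        sample = pts[rng.choice(len(pts), size=3, replace=False)]
        n = np.cross(sample[1] - sample[0], sample[2] - sample[0])
        norm = np.linalg.norm(n)
        if norm < 1e-12:                 # degenerate (collinear) sample
            continue
        n /= norm
        d = -n @ sample[0]
        mask = np.abs(pts @ n + d) < threshold
        count = int(mask.sum())
        if count > best_count:           # keep the largest consensus set
            best_count, best_mask = count, mask
    if best_mask is None:
        raise ValueError("no non-degenerate sample found")
    # Refine with a least-squares plane (SVD) through all inliers.
    inliers = pts[best_mask]
    centroid = inliers.mean(axis=0)
    _, _, vt = np.linalg.svd(inliers - centroid)
    normal = vt[-1]                      # direction of least variance
    d = -normal @ centroid
    mask = np.abs(pts @ normal + d) < threshold
    return normal, d, mask
```

Removing the inliers and running the fit again would expose the next face; fitting a full cube would then combine the recovered face normals and extents.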

Kimchi and Chips use the standard chessboard calibration process to find the lens parameters of the cameras. Once a structured light scan has been performed, they treat the calibration tree as a travelling salesman problem, finding routes between cameras and projectors that are linked by corresponding patterns. This information is then used to determine which projector is best placed to project onto each area of the scene.
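How K&C map calibration onto the travelling salesman problem is not detailed here. One plausible reading, labelled as an assumption: treat every camera and projector as a node, give each pair a cost that falls as their shared correspondences grow (for example 1 / count), and look for the cheapest route that visits every device once, so calibration can be chained from one device to the next. The sketch solves this open-path variant of the TSP exactly with Held-Karp dynamic programming, which costs O(2^n · n^2) and is practical for the ten or so devices in a setup like this one. It is not the ofxTSP API, and `shortest_calibration_route` is a hypothetical name.

```python
from itertools import combinations


def shortest_calibration_route(cost):
    """Cheapest path visiting every device exactly once (Held-Karp, open path).

    cost[i][j] is a hypothetical edge cost between devices i and j, e.g.
    1 / (number of shared structured-light correspondences); use math.inf
    where two devices share no view.  Returns (total_cost, order).
    """
    n = len(cost)
    # best[(mask, j)] = (cost, prev): cheapest path covering `mask`, ending at j
    best = {(1 << j, j): (0.0, None) for j in range(n)}
    for size in range(2, n + 1):
        for subset in combinations(range(n), size):
            mask = sum(1 << k for k in subset)
            for j in subset:
                prev_mask = mask & ~(1 << j)
                best[(mask, j)] = min(
                    (best[(prev_mask, k)][0] + cost[k][j], k)
                    for k in subset
                    if k != j
                )
    full = (1 << n) - 1
    total, end = min((best[(full, j)][0], j) for j in range(n))
    # Walk the predecessor links back to recover the visiting order.
    order, mask, j = [], full, end
    while j is not None:
        order.append(j)
        _, prev = best[(mask, j)]
        mask &= ~(1 << j)
        j = prev
    return total, order[::-1]
```

For a chain of four devices where only neighbours overlap, the route simply follows the chain in one direction or the other.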

Each day, a start-up routine performs the scans and starts the calibration process with VVVV.

Platforms:

K&C use several software platforms to bring this piece to life, chief among them VVVV, openFrameworks and Cinema 4D. VVVV was used for pre-visualization, simulation, prototyping and runtime display.

openFrameworks was used for all the computer vision processing. Cinema 4D was used to design and animate the 3D content, with custom Python scripts rendering the volumetric data as images for the final output. Perhaps most impressive is that much of the focus and research behind the work went into interoperability between the platforms, so that the various steps could be plugged together.
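The artists' statement later in this piece notes that every projector pixel ends up with a known 3D position. That suggests a simple way to turn volumetric content into projector images: sample a 3D field at each pixel's scanned position. A minimal NumPy sketch of that idea, using a hypothetical glowing spherical shell as the field; it does not reproduce K&C's Cinema 4D scripts or use the Cinema 4D Python API, and `render_shell` is a hypothetical name.

```python
import numpy as np


def render_shell(positions, centre, radius, thickness):
    """Brightness per projector pixel for a hypothetical glowing spherical shell.

    positions: (H, W, 3) array of scanned 3D points, one per projector pixel,
    with NaN where the pixel hits no block.  Returns an (H, W) image in [0, 1].
    """
    dist = np.linalg.norm(positions - np.asarray(centre, dtype=float), axis=-1)
    # Gaussian falloff around the shell surface |x - centre| = radius.
    brightness = np.exp(-0.5 * ((dist - radius) / thickness) ** 2)
    # Pixels with no scanned position (NaN) stay dark.
    return np.nan_to_num(brightness, nan=0.0)
```

Animating `radius` over time would sweep the shell through the hemisphere of blocks, lighting only the pixels whose scanned points the surface passes through.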

Code:

Being true champions of projection mapping, K&C have made all their code available on GitHub:

Project Files: VVVV projects | openFrameworks projects

Applications: OpenNI-Measure : take measurements on a building site using a Kinect | Kinect intervalometer : apps for taking time-lapse point-cloud recordings using a Kinect | VVVV.External.StartupControl : manage installation startups on Windows computers

Algorithms: ProCamSolver : experimental system for solving the intrinsics and extrinsics of projectors and cameras in a structured light scanning system | PCL projects

openFrameworks addons: ofxGrabCam : camera for effectively browsing 3D scenes | ofxRay : ray casting, projection maths and triangulation | ofxGraycode : structured light scanning | ofxCvGui2 : GUI for computer vision tasks | ofxTSP : solve the Travelling Salesman Problem | ofxUeye : interface with IDS Imaging cameras

VVVV plugins: VVVV.Nodes.ProjectorSimulation : simulate projectors | VVVV.Nodes.Image : threaded image processing, OpenCV routines and structured light

In their own words:

A hemisphere of 5,500 white blocks occupies the air, each hanging from above in a pattern which repeats in order and disorder. Pixels play over the physical blocks as an emulsion of digital light within the physical space, producing a habitat for digital forms to exist in our world.

A group of external projectors penetrate the volume of cubes with pixel-rays, until every single one of the cubes becomes coated with pixels. By scanning with structured light, each pixel receives a set of known information, such as its absolute 3d position within the volume, and the identity of the block that it lives on.

The spectator is invited to study a boundary line between the digital and natural worlds, to see the limitations of how the 2 spaces co-exist. The aesthetic engine spans these digital and physical realms. Volumetric imagery is generated digitally as a cloud of discontinuous surfaces, which are then applied through the video projectors onto the polymer blocks. By rendering figurations of imaginary digital forms into the limiting error-driven physical system, the system acts as an agency of abstraction by redefining and grading the intentions of imaginary forms through its own vocabulary.

The flow of light in the installation creates visual mass. The spectator’s balance is shifted by this visceral movement causing a kinaesthetic reaction. For digital to exist in the real world, it must suffer its rules, and gain its possibilities. The sparse physical nature of the installation allows for the digital form to create a continuous manifold within the space across the discrete blocks, whilst also passing through each block as a continuous pocket of physical space.

The polymer blocks are engineered for both diffusive/translucent properties and to have a reflective/projectable response to the pixel-rays. This way a block can act as a site for illumination or for imagery.

The incomplete form of the hemisphere becomes extinct at its base, but extends through a reflection below, and therein becomes complete. It takes inspiration from nature, whilst becoming an artefact of technology.

Permanently installed at the Nakdong River Cultural Centre gallery in Busan, Republic of Korea.

See the Making of:

Making of “Assembly” from Mimi Son on Vimeo.

Credits:

Kimchi and Chips: Mimi Son and Elliot Woods

Production staff: Minjae Kim and Minjae Park

Mathematicians: Daniel Tang and Chris Coleman-Smith

Videography: MONOCROM, Mimi Son, Elliot Woods | Music by Johnny Ripper

Manufacturing: Star Acrylic, Seoul and Dongik Profile, Bucheon

CreativeApplications